Remember the time, not so long ago, when the concepts of truth and falsity were the stuff of philosophy teachers?

It was a time when, in an institution like mine, the most curious students indulged in absurd thought experiments opposing the real and the unreal, while the others, more down to earth, patiently waited for the class to end so they could save their efforts for lessons in economics or law, which were likely to be of some use in their lives. Those were the good old days.

But now, with the arrival of artificial intelligence (AI), things have changed a great deal. The March 29 letter, co-signed by several leading developers and intellectuals (Musk, Wozniak, Harari and others) and calling for a moratorium on the development of AIs more powerful than GPT-4, testifies to the depth of the societal upheavals that we must now reflect on.

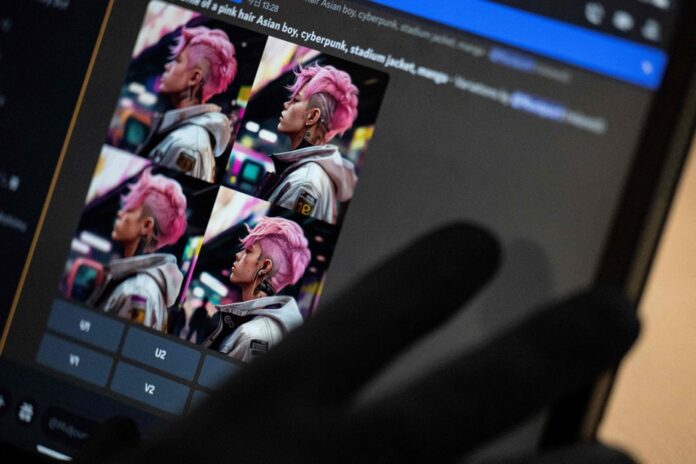

Whether we think of the viral image of the Pope wearing a stylish coat, created by Midjourney, or of the interview featuring Justin Trudeau and Joe Rogan, created with the help of another AI, the same conclusion is inescapable: the risks of misuse are very real. Scenarios such as the release of a video of Vladimir Putin announcing that World War III has begun, or the arrival in your inbox of a convincing photo of yourself in the act with a prostitute, no longer require technical progress so much as the assembly of existing capabilities.

When these AI-engineered images, videos, and texts circulate through inboxes, newsfeeds, and social media, who will we trust? If many are talking about a possible collapse of civilization, it is, among other things, because of an attack on the concept that serves as the cornerstone of our societies: truth. We thought it was the business of philosophers; we now realize, with the arrival of these entities, that it ensures social cohesion, upholds the rule of law, and contributes to the proper functioning of the economy.

If the possibilities for malicious use of AIs do not worry you, you should know that it takes no one intent on doing harm to produce harmful effects. This is where things become more troubling.

The question of cheating and plagiarism in schools, made extraordinarily easy by the arrival of AI, has rightly caused much ink to be spilled in recent months. One of the fundamental functions of the education system is to certify that a person has acquired certain abilities and skills before being authorized to perform certain actions and occupy various critical functions in society.

Yet Cégep à distance has the wind in its sails and currently records tens of thousands of registrations annually[1]. How do you maintain fairness in a remote assessment process? What are degrees earned in this way worth now? Within the walls of the very real establishment where I teach, several students, faced with grades lower than those of classmates who had used AIs to do their work at home, recently confided to me that in the future they too would use them. Are they bad students, or young realists who, given the gap between the ways of an outdated system and the current world, are relying on a sensible adaptive strategy?

Even more serious is the cheerful attitude that has taken hold of many within our institutions. We now hear certain educators proclaiming that the declarative knowledge we once considered important must be replaced by new critical thinking skills, on which we should rely in our inevitable dealings with these machines. Whatever can be googled would therefore no longer have a place on an exam.

One question, though: how the hell is a person supposed to think critically about an answer obtained from an AI without first having a solid body of declarative knowledge? That knowledge is necessary for building more elaborate knowledge, which is itself involved in exercising the high-level intellectual capacity that is critical judgment.

The arrival of these AIs forces us to ask questions that we have swept under the rug for too long: those that concern the foundations of our society and that underlie the education system. What does the ideal we pursue, getting people to think for themselves, mean in this new context? How exactly should thinking for oneself manifest itself? What place should we give to general knowledge? If it is technologically possible to virtualize learning and tie it to such technological powerhouses, is it desirable? What meaning should we give to this vague idea of adapting to AI?

Knowing that we cannot put the genie back in the bottle and that the restraints, if they hold, will not contain it for long, our society urgently needs to give itself guidelines. Adapting, by itself, means nothing. Improvisation and tinkering cannot go on any longer. We need to determine what matters to us and develop a plan to get there.